I kept reading about Retrieval-Augmented Generation (RAG) systems and wondering how powerful they really were. They were often presented as a key building block for practical LLM applications, but I wanted to understand their strengths and limitations firsthand. The best way to do that felt simple: try to build one myself.

The core idea behind RAG immediately clicked for me. I let it retrieve relevant information at query time and then reason over it. This approach felt simpler and more honest than fine-tuning, especially for a small project. My system takes a collection of documents, splits them into chunks, converts those chunks into embeddings using OpenAI’s API, and stores them in a FAISS index. When a user asks a question, the system retrieves the most relevant chunks and injects them into a prompt so the model can generate an answer grounded in real context.

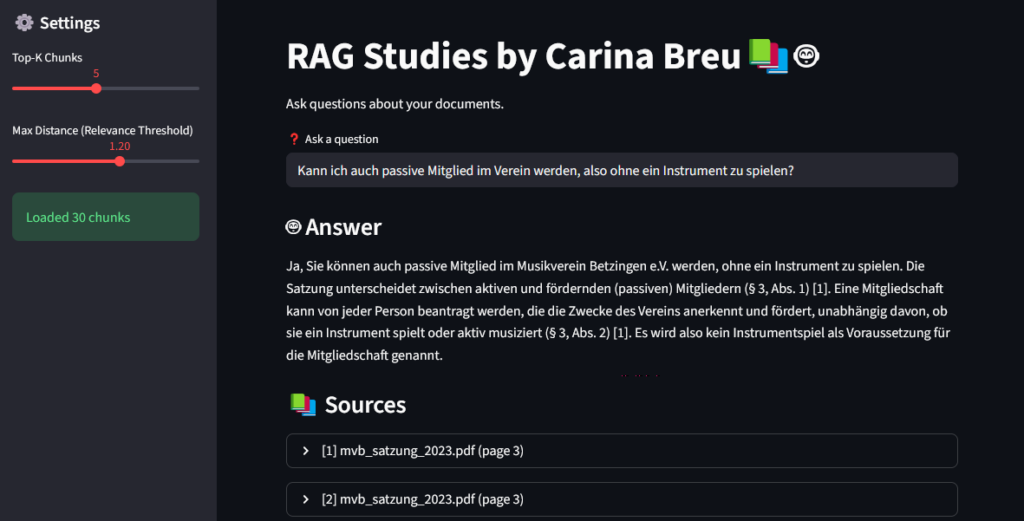

Pairing this with Streamlit made the project far more enjoyable. Having a simple interface where I could type a question, see which document chunks were retrieved, and then read the final answer created a tight feedback loop that made debugging much easier. It turned what could have been a backend experiment into something that felt like a real application.

To test my system, I used documents in PDF about billing, constititution and a general flyer of my music club, where I play the clarinet. And it worked out well from the beginning. When I asked the program about the prices of clarinet lessons, it perfectly summarized the options offered by the club. In my next question I asked the chatbot about honorary membership, but did not get results right away. The bot informed me instantly, that it lacks information about this topic. I soon realized, that the constitutional document was not loaded correctly and I tried it again. And this time it worked out again perfectly.

The biggest lesson I took away from this project is that RAG systems don’t fail loudly—they fail quietly. When answers are bad, it’s usually because the wrong context was retrieved, not because the model is weak. Being able to inspect that retrieved context is essential. Building this system helped demystify RAGs for me and shifted my thinking from “how smart is the model?” to “how good is the information I’m giving it?”

For anyone interested in the logic behind LLMs, I’d strongly recommend building a small RAG project. It’s one of the most practical ways to learn how these systems actually work, and it forces you to think about data, retrieval, and constraints rather than treating the model like a black box.

If you are interested in more details of my RAG project, checkout my Github project here.