Introduction

Do you know those dog people who know everything about their four-legged companion and can tell the breed of every dog they meet on a walk?

I definitely do not belong to this group. To me, some dogs look more alike than others, but I would never be sure about the breed of any dog, since I only know the most common breeds, like Maltese, Poodle, Dachshund and German Shepherd.

To give an idea of how difficult it is to distinguish between dog breeds, Udacity’s Dog Breed Classifier Notebook contains some example images that I want to share with you:

These two dogs look very similar, yet they belong to different breeds.

Brittany

Welsh Springer Spaniel

On the other hand, these three different-looking dogs are all Labrador Retrievers.

Now imagine there were a program that could identify the dog breed from just an uploaded photo…

Project Definition

This is where the capstone project of my Data Science nanodegree at Udacity starts. Following a Jupyter Notebook from Udacity, I worked through several exercises to build different Convolutional Neural Networks (CNNs) that recognize humans and dogs and distinguish between the breeds in uploaded dog photos. The project covers the following steps:

- using a prepared face detector and evaluating its performance,

- setting up a dog detector based on the ResNet-50 model,

- setting up a CNN from scratch and

- adapting the widely known CNN Xception to the dog breed use case using transfer learning.

To train and test these models, Udacity provided a dataset of 8351 images of 133 different dog breeds, subdivided into training data (80%), validation data (10%) and test data (10%), together with 13233 human images.

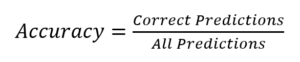

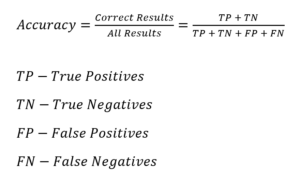

To evaluate the described models, I use accuracy as the metric. It is calculated as the ratio of correct predictions to the total number of predictions and can be used for both binary and multiclass classification.

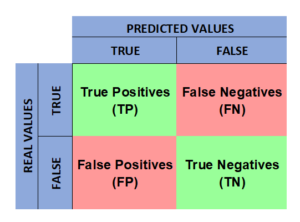

The table below shows a matrix of possible outcomes and indicates correct predictions in green (V) and incorrect predictions in red (X).

Accuracy works well in a setting where the correct classification of each class is equally important, as in the dog breed classification case. Correctly classifying German Shepherds is neither more nor less important than correctly classifying Poodles.

Furthermore, it is important that the classes in the dataset are balanced; otherwise accuracy might be misleading. I will come back to this requirement in the dataset section and show that the Udacity dog breed dataset largely meets it.

To finalize my project, I built a web app that combines the face detector, the dog detector and the Xception-based CNN. It takes a custom photo and identifies whether a dog or a human is in the picture. The app then classifies the breed of the dog or, if a human photo is uploaded, shows the most closely resembling dog breed. Try it out!
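To illustrate why balance matters, here is a minimal sketch with made-up labels (not taken from the Udacity data). The helper `accuracy` is my own illustration, not code from the notebook. A "model" that always answers Poodle still reaches a high accuracy when almost all images are poodles:

```python
def accuracy(y_true, y_pred):
    """Return the share of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("accuracy is undefined for an empty list")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Made-up labels: 95 poodles and 5 dachshunds (strongly imbalanced).
true_breeds = ["Poodle"] * 95 + ["Dachshund"] * 5

# A useless "model" that answers Poodle for every image.
always_poodle = ["Poodle"] * 100

print(accuracy(true_breeds, always_poodle))  # 0.95
```

Although this model never recognizes a single dachshund, its accuracy is 95%. With roughly balanced classes, such a shortcut would not pay off.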

Analysis

Dataset

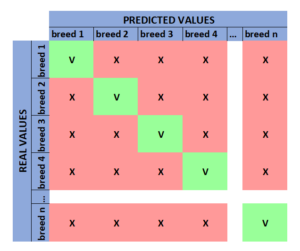

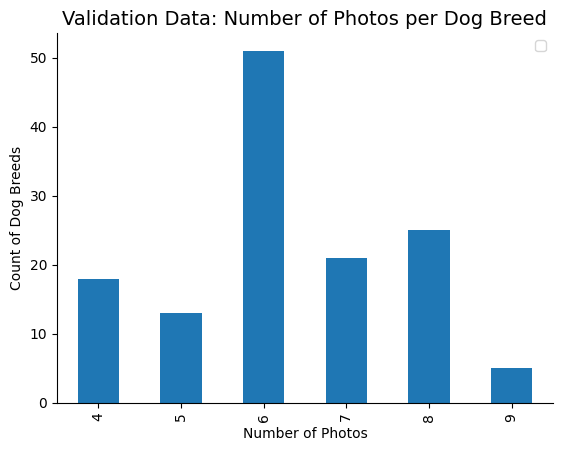

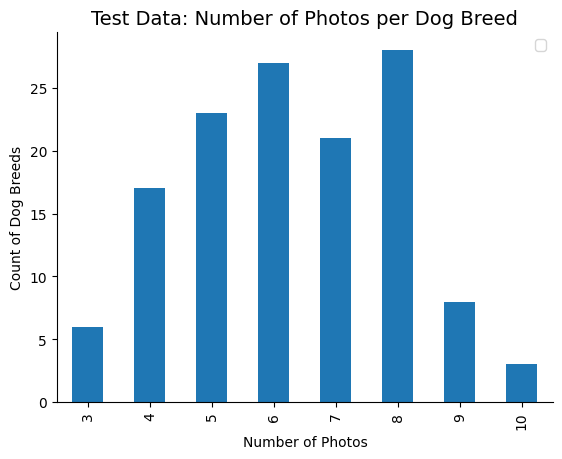

The dataset contains 8351 images of 133 different dog breeds. These images are subdivided into train data (80%), validation data (10%) and test data (10%). The images, however, are not uniformly distributed among the different breeds but range from 26 to 77 images per dog breed in the training data, from 4 to 9 in the validation data and from 3 to 10 in the test data. On average there are 50.2 images per dog breed in the training data and 6.3 images in the validation and test data.

At first glance, this variation seems very large. But when we consider each breed's share of the training images, it becomes clear that it only ranges from 0.39% (Norwegian Buhund and Xoloitzcuintli) to 1.15% (Alaskan Malamute). To me, this spread is still acceptable for applying the accuracy metric.
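The shares can be retraced with a few lines of arithmetic. This is only a sketch: the size of the training set is approximated here as 80% of the 8351 images, and the function name `share_in_percent` is my own choice for illustration.

```python
TOTAL_IMAGES = 8351
TRAIN_SHARE = 0.80

# Approximation: derived from the stated 80% split, not an exact count.
train_images = round(TOTAL_IMAGES * TRAIN_SHARE)


def share_in_percent(images_of_breed, images_total):
    """Share of one breed among all images, in percent."""
    return 100 * images_of_breed / images_total


smallest = share_in_percent(26, train_images)  # breed with the fewest images
largest = share_in_percent(77, train_images)   # breed with the most images

print(f"{smallest:.2f}% to {largest:.2f}%")  # 0.39% to 1.15%
```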

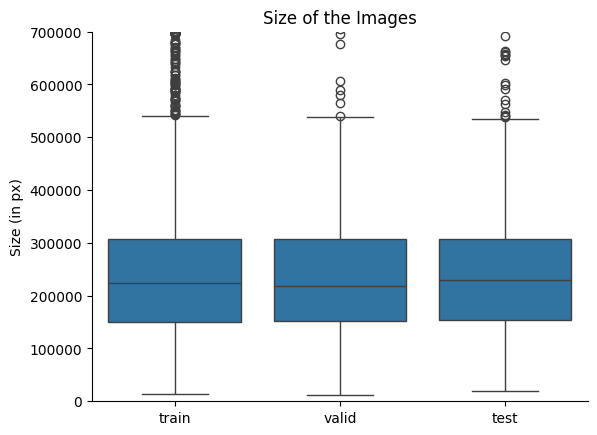

In the next step, I looked at the image sizes in pixels. The chart below shows that the sizes are similarly distributed across the training, validation and test images. In each group there are some outliers that are much larger, but the median size is similar across all three sets.

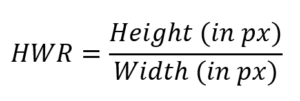

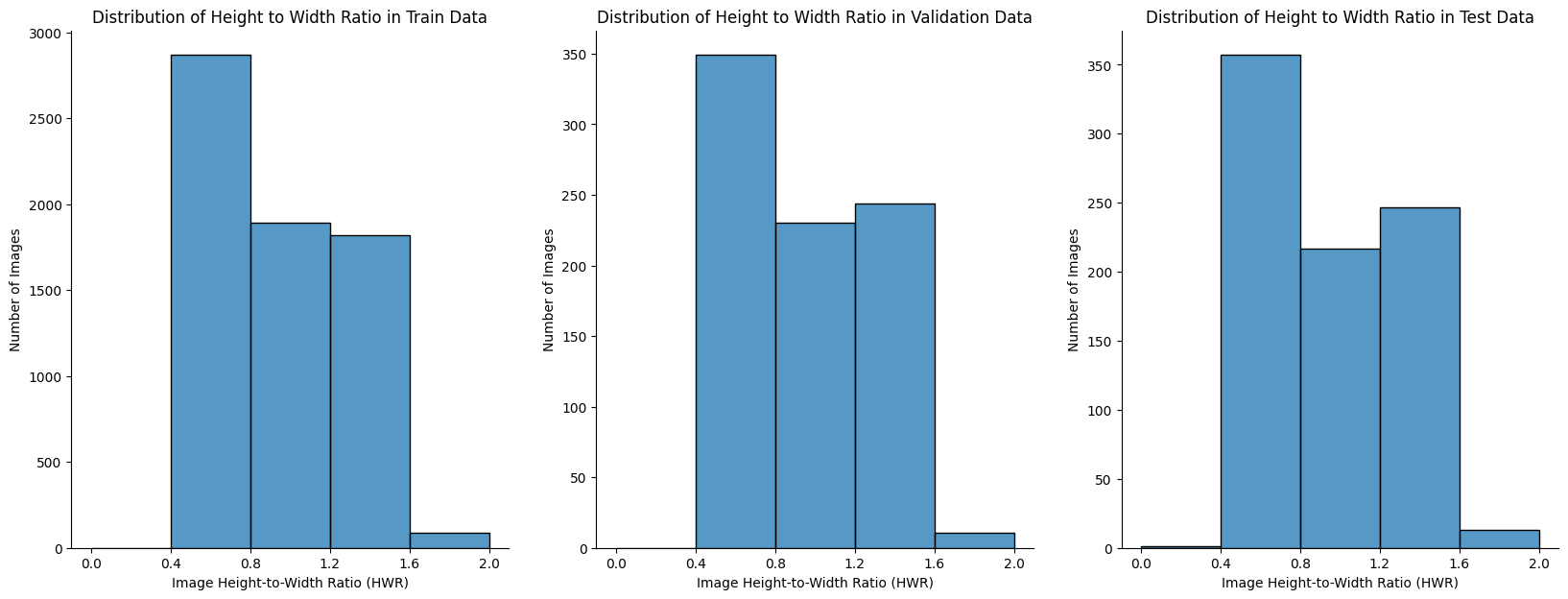

To get an idea of the orientation of the images, I calculated the ratio of the height in pixels to the width in pixels. Images in portrait format have ratios greater than one, while images in landscape format have height-to-width ratios of less than one. Square images have a height-to-width ratio of one.

The chart below shows several classes of height-to-width ratios. Most images fall into the landscape class with a ratio smaller than 0.8. Fewer images have an almost square height-to-width ratio (0.8-1.2) or a portrait height-to-width ratio (>1.2).

Furthermore, Udacity provides a dataset of 13233 human images. Since I only use the first 100 images to test the face and dog detectors, I will not go into further detail here.
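The grouping into ratio classes can be sketched as follows. The function name `ratio_class` and the example image sizes are made up for illustration; only the class boundaries (0.8 and 1.2) come from the analysis above.

```python
from collections import Counter


def ratio_class(width_px, height_px):
    """Assign an image to a class based on its height-to-width ratio."""
    ratio = height_px / width_px
    if ratio < 0.8:
        return "landscape"
    if ratio <= 1.2:
        return "almost square"
    return "portrait"


# Made-up (width, height) pairs, not taken from the dataset.
example_sizes = [(640, 480), (500, 500), (400, 600), (800, 533)]

counts = Counter(ratio_class(w, h) for w, h in example_sizes)
print(counts["landscape"], counts["almost square"], counts["portrait"])  # 2 1 1
```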

Face Detector and Dog Detector

To start with, I picked a subset of 100 photos from each dataset to evaluate a face detector (FD). The face detector is based on OpenCV's implementation of Haar feature-based cascade classifiers, which detects the number of faces in an image. It returns True when at least one human face is detected in an image and False when no face is found.

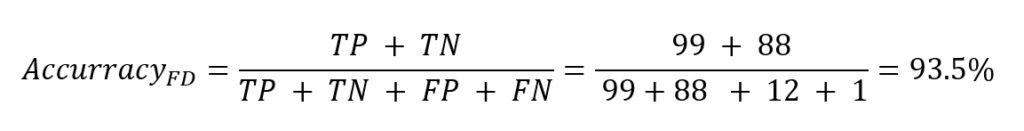

The accuracy for binary classifications can be calculated from True Positives (TP), True Negatives (TN), False Negatives (FN) and False Positives (FP), with TP and TN adding up to the correct predictions and FP and FN adding up to the incorrect predictions. It is defined by the following formula:

Accuracy = (TP + TN) / (TP + TN + FP + FN)

In the case of the face detector, these are:

- True Positives: Pictures of humans, for which the face detector returns true –> correct result

- True Negatives: Pictures without humans, for which the face detector returns false –> correct result

- False Negatives: Pictures of humans, for which the face detector returns false –> incorrect result

- False Positives: Pictures without humans, for which the face detector returns true –> incorrect result

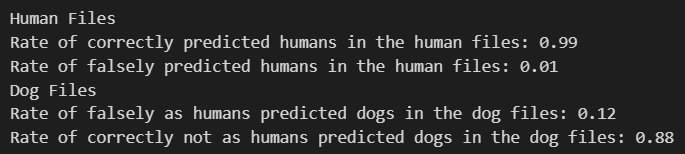

Testing the face detector on 100 photos of humans and 100 photos of dogs showed that 99% of the human faces were classified correctly, but the detector also misclassified 12% of the dog photos as humans.

This corresponds to a face detector accuracy of 93.5%.
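The 93.5% can be retraced from the test results. In the sketch below, the four counts are derived from the percentages above (99 of 100 human photos detected, 12 of 100 dog photos wrongly flagged). The function name `binary_accuracy` is my own choice for illustration.

```python
def binary_accuracy(tp, tn, fp, fn):
    """Correct predictions divided by all predictions."""
    return (tp + tn) / (tp + tn + fp + fn)


tp = 99  # human photos with a detected face
fn = 1   # human photos without a detected face
fp = 12  # dog photos with a (wrongly) detected face
tn = 88  # dog photos without a detected face

print(binary_accuracy(tp, tn, fp, fn))  # 0.935
```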

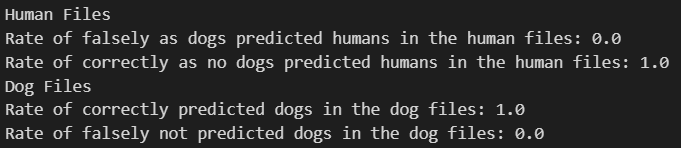

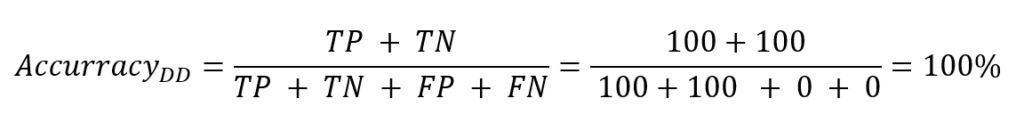

In the next step, I created a dog detector (DD) based on the ResNet-50 model. It detects whether an image shows a dog, and I tested it on the same dataset of 100 human photos and 100 dog photos.

This resulted in a much better accuracy. All dog pictures and human pictures were classified correctly, resulting in a test accuracy of 100%.

Distinguishing between a dog and a human is quite simple, but what about distinguishing between 133 different dog breeds? Among humans, this kind of classification is reserved for real dog specialists.

Dog Breed Classifier

Custom CNN

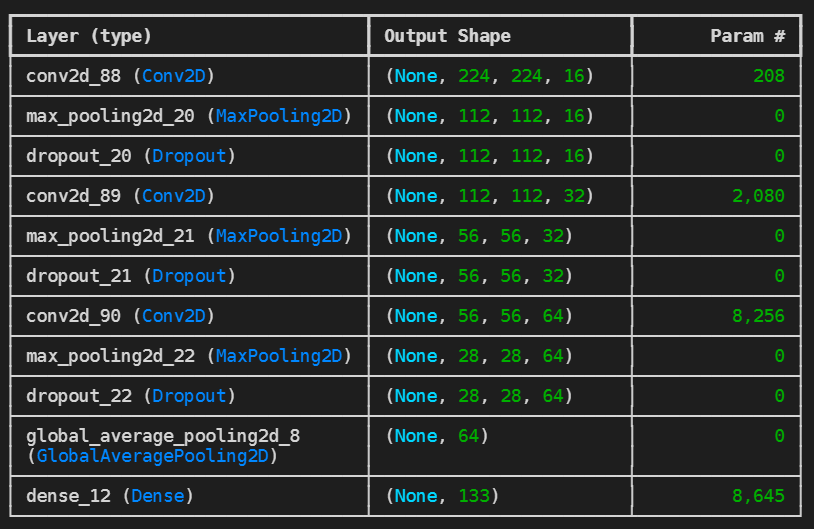

To start with, I set up my own CNN from scratch, but to say it up front: it performed really poorly.

The model consists of 3 convolutional layers, each of which is followed by a pooling layer and a dropout layer. The dropout layers were added to make the model more robust and to prevent overfitting. The last two layers are a global average pooling layer and a dense layer with 133 nodes to match the 133 different dog breeds. The model was trained for 20 epochs, resulting in a test accuracy of about 8.5%.

Transfer learning based CNN (Xception)

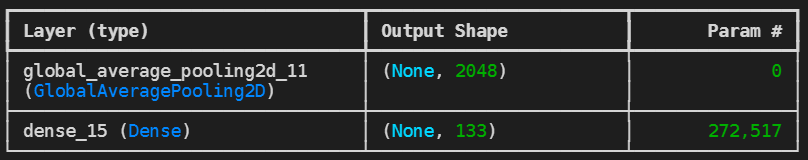

In the next step, I applied transfer learning by using and adapting the pre-trained CNN Xception. After the last convolutional layer, I added a global average pooling layer, which calculates the average of each feature map produced by the Xception model. This creates a direct link between the final feature maps of Xception and the resulting dog breed categories and, because the pooling layer has no trainable parameters of its own, helps to avoid overfitting. This layer is followed by a dense (fully connected) layer with 133 nodes, one for each dog breed. For this layer, I chose a softmax activation function so that the model outputs form a probability distribution over the breeds. I trained the model for 20 epochs and ended up with a very good test accuracy of 85.0%.
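To make the added layers more tangible, here is a toy sketch in plain Python of what global average pooling, a dense layer and softmax compute. It is not the Keras code of the project. The feature maps, weights and biases are made-up numbers, and the sizes (2 feature maps, 3 classes) are far smaller than in the real model.

```python
import math


def global_average_pooling(feature_maps):
    """Reduce every 2-D feature map to the mean of its values."""
    pooled = []
    for fmap in feature_maps:
        values = [v for row in fmap for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


def dense(inputs, weights, biases):
    """One score per output node: weighted sum of the inputs plus a bias."""
    return [
        sum(x * w for x, w in zip(inputs, row)) + b
        for row, b in zip(weights, biases)
    ]


def softmax(scores):
    """Turn scores into probabilities that sum to one."""
    highest = max(scores)  # subtracting the maximum avoids overflow
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up toy data: 2 feature maps of size 2x2 and 3 output classes.
feature_maps = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[0.0, 1.0], [1.0, 0.0]],
]
weights = [[0.5, -1.0], [0.1, 0.3], [-0.2, 0.8]]
biases = [0.0, 0.1, -0.1]

pooled = global_average_pooling(feature_maps)
probabilities = softmax(dense(pooled, weights, biases))

print(pooled)                        # [2.5, 0.5]
print(round(sum(probabilities), 6))  # 1.0
```

The predicted class is simply the position of the largest probability. In the real model the same idea applies, just with one output per dog breed.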

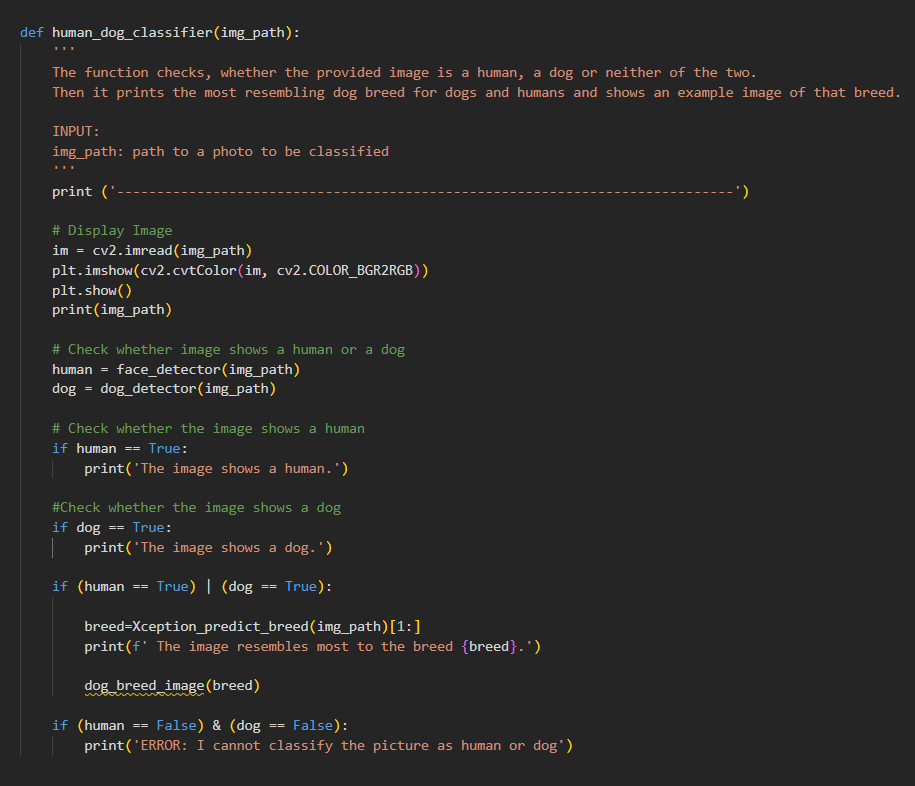

To make use of this model along with the face and dog detectors, I wrote a set of functions that not only return the corresponding model results but also print an example image of the predicted dog breed, and combined them in the function human_dog_classifier:

The algorithm takes an image path and shows the image along with the file name. It then runs the face detector and the dog detector and prints a message if at least one of the results is true. If a human or a dog is detected in the image, it also predicts the breed using the Xception-based model and prints the resulting breed together with an example image of that breed. If neither a human nor a dog is detected, the algorithm prints an error message.
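The decision logic described above can be sketched as follows. This is a simplified illustration, not the actual human_dog_classifier: the detectors and the breed model are passed in as functions, the sketch returns a message instead of printing images, and the name `human_dog_classifier_sketch`, the message texts and the choice to check for a dog first are all my own assumptions.

```python
def human_dog_classifier_sketch(img_path, face_detector, dog_detector, predict_breed):
    """Combine both detectors and the breed model into one message."""
    is_dog = dog_detector(img_path)
    is_human = face_detector(img_path)

    if not (is_dog or is_human):
        return "Error: neither a dog nor a human was detected."

    # The breed is only predicted when one of the detectors fired.
    breed = predict_breed(img_path)
    if is_dog:  # assumption: a detected dog takes priority over a face
        return f"This dog looks like a {breed}."
    return f"This human most resembles a {breed}."


# Stand-in functions for a quick check; the real ones would run the models.
message = human_dog_classifier_sketch(
    "example.jpg",  # hypothetical file name
    face_detector=lambda path: True,
    dog_detector=lambda path: False,
    predict_breed=lambda path: "Afghan Hound",
)
print(message)  # This human most resembles a Afghan Hound.
```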

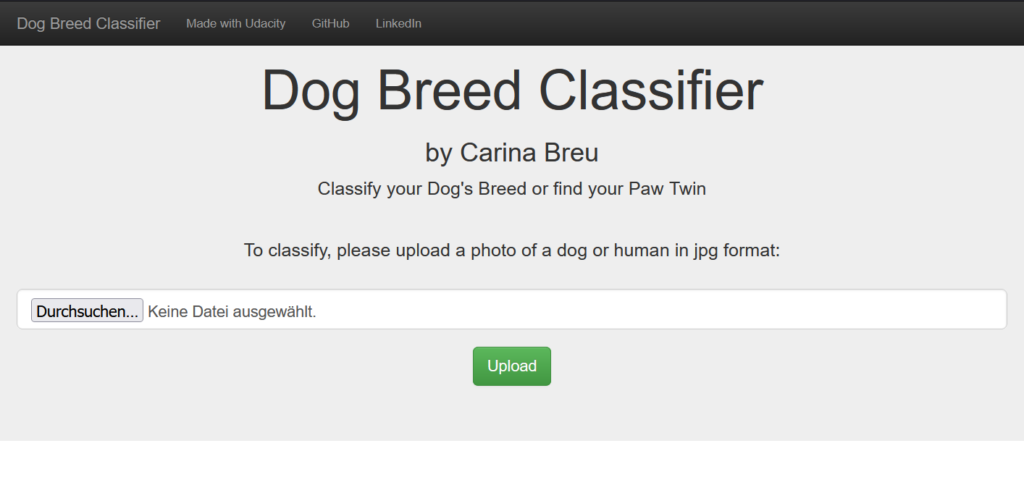

Web App

Finally, I transferred my functions and the resulting model into a Flask web app. To start with, I copied the file structure of my previous project on NLP and removed the project-specific content to keep only the overall structure and layout.

I then added an upload input and button to the starting page. A small intro text and some examples on the starting page illustrate the purpose of the app to the user.

By clicking on the button, the user is forwarded to the classification result, which shows the uploaded picture and an example picture of the dog breed identified by the model. Behind the scenes, the dog app runs the face detector, the dog detector and the Xception-based CNN to classify the provided image.

During the development phase, I started by uploading the user image to a folder on the server so that I could reuse my previous code from the Jupyter Notebook, which takes file paths. This had the drawback that when two users used the app at the same time, one of them could end up with the photo and the result of the other user. To fix this bug, I changed the code in the app so that the uploaded image is temporarily stored in a variable, from which it is classified and displayed.

For more details about the code of my dog app, please refer to my GitHub project here.
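The idea of keeping the upload in a variable can be sketched without Flask. This is not the code of my app but one possible approach, under the assumption that the image is shown in the page as a base64 data URI. The names `handle_upload` and `to_data_uri` and the stand-in classifier are hypothetical.

```python
import base64
import io


def to_data_uri(image_bytes, mime_type="image/jpeg"):
    """Encode raw image bytes so an <img> tag can display them directly."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def handle_upload(file_obj, classify):
    """Classify one upload without writing it to a shared folder."""
    # The bytes live in a local variable, so every request has its own copy.
    image_bytes = file_obj.read()
    result = classify(io.BytesIO(image_bytes))
    return result, to_data_uri(image_bytes)


# Stand-in upload and classifier for a quick check.
fake_upload = io.BytesIO(b"not-a-real-image")
result, data_uri = handle_upload(fake_upload, classify=lambda stream: "Norfolk Terrier")
print(result)  # Norfolk Terrier
```

Because nothing is written to a common file path, two simultaneous requests can no longer overwrite each other's image.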

Examples

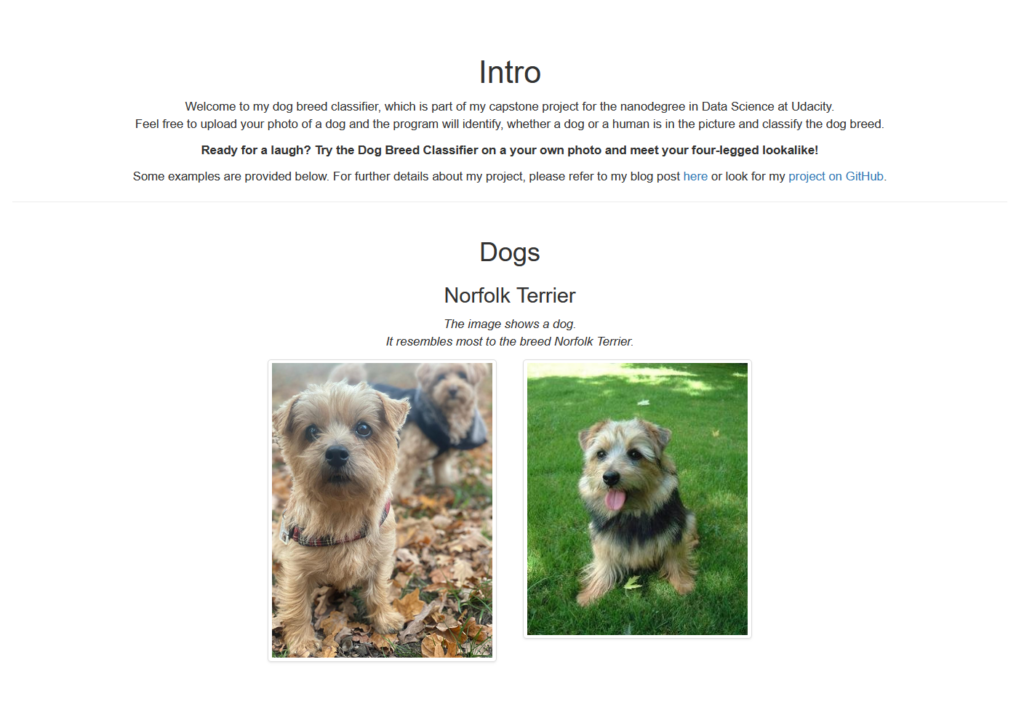

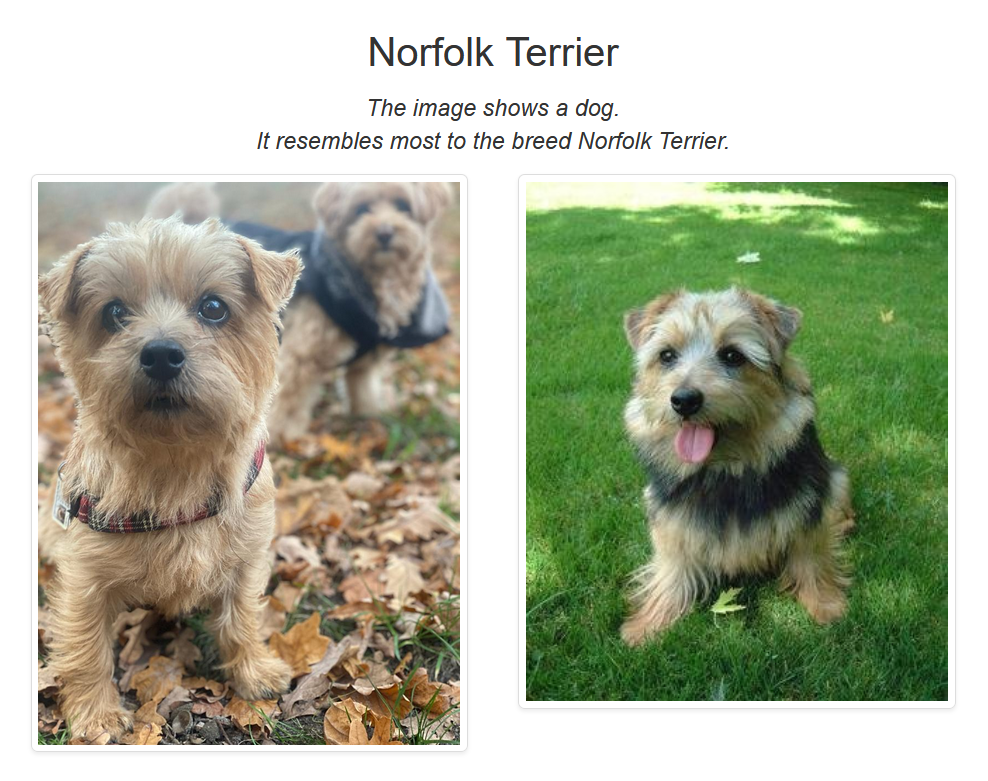

To get some examples, I asked a friend to send me a photo of her friend's dog Molly along with its breed. I cannot put into words how pleased I was when my dog app returned exactly the breed my friend had told me. A Norfolk Terrier!

Another friend sent me this picture of her beautiful dog Yuna. And again, the dog app classified her correctly as an Australian Shepherd!

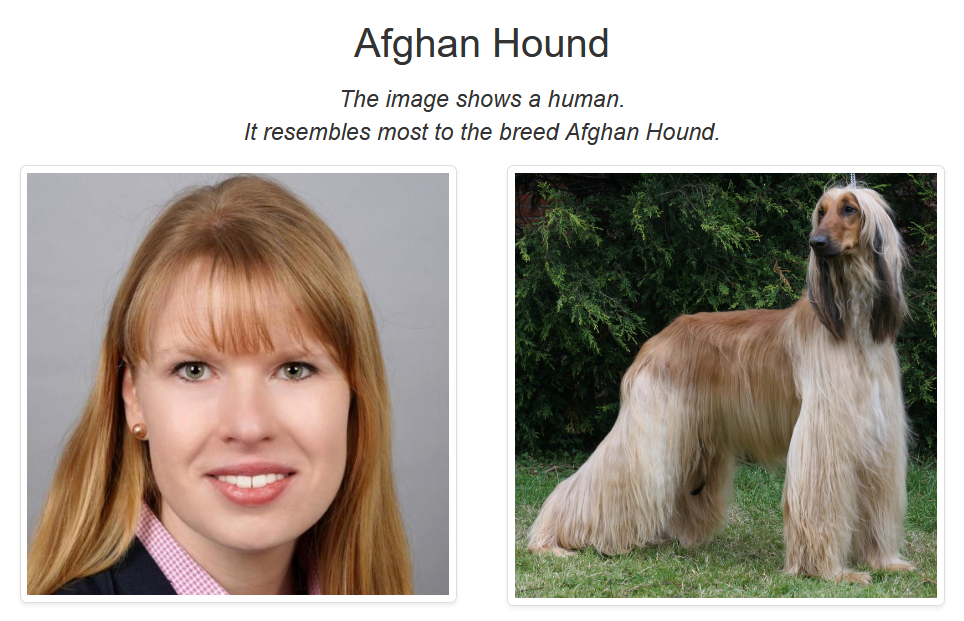

And the app is not only great at identifying dog breeds, it also works on human photos. When I enter my own photo, it tells me that I most resemble an Afghan Hound. Have a look at the photos yourself. It somehow matches, doesn’t it?

Conclusion

The performance of my CNNs differs widely. Setting up my own CNN from scratch resulted in very poor performance, with a test accuracy of less than 10%.

However, when using Xception, one of the best-known and most powerful pretrained CNNs, as a starting point and adapting it to the dog breed case by transfer learning, I reached an impressive accuracy of 85.0% on the test data set.

To improve the models described above, the dataset can be enlarged by collecting further images or by augmenting the training data.

As for the algorithm of the dog breed classifier itself, numerical IDs for each dog breed could improve the processing time and reduce the probability of errors, for example when folder names change. Adding some checks would further increase the reliability of the code, for example to make sure that only images are processed. Finally, it would be helpful to add debug outputs to make maintenance and troubleshooting easier.

In practice, it is really astonishing how accurately the model classifies dogs among the 133 different dog breeds, a classification that otherwise only real canine experts can make! Not to mention the funny moments when our kids used the Dog Breed Classifier App to find the dogs that most resemble their parents.
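Two of these ideas can be sketched briefly. The code below is only an illustration of the suggestion, not part of my app: the function names, the list of allowed extensions and the example breeds are assumptions, and a check based on the file extension alone is a weak first filter rather than a real validation of the file content.

```python
from pathlib import Path

# Assumed list of accepted file types.
ALLOWED_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def looks_like_image(filename):
    """Cheap pre-check based on the file extension only."""
    return Path(filename).suffix.lower() in ALLOWED_EXTENSIONS


def build_breed_ids(breed_names):
    """Give every breed a stable numerical ID, independent of folder names."""
    return {name: breed_id for breed_id, name in enumerate(sorted(set(breed_names)))}


breed_ids = build_breed_ids(["Poodle", "Dachshund", "Brittany"])
print(breed_ids)  # {'Brittany': 0, 'Dachshund': 1, 'Poodle': 2}
print(looks_like_image("molly.JPG"), looks_like_image("notes.txt"))  # True False
```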

Ready for a laugh? Try my Dog Breed Classifier, upload your photo and meet your four-legged lookalike!

If you are interested in more details of my dog breed classification project, check out my GitHub project here.